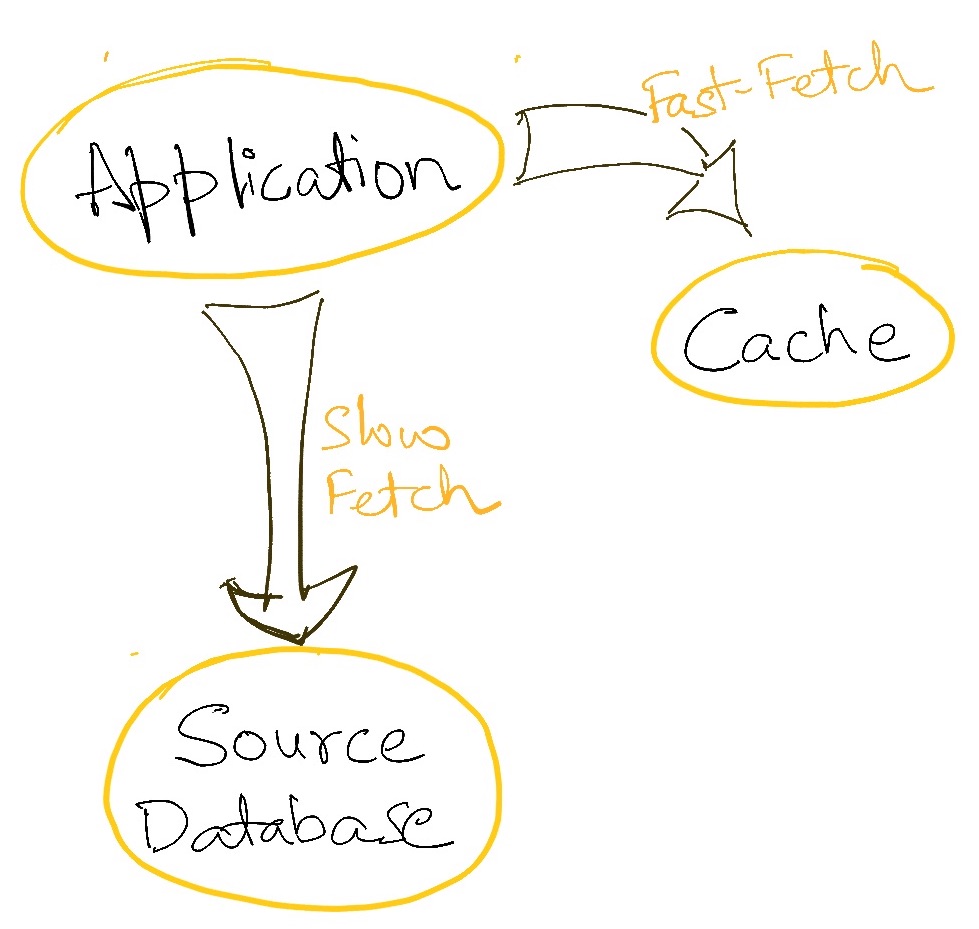

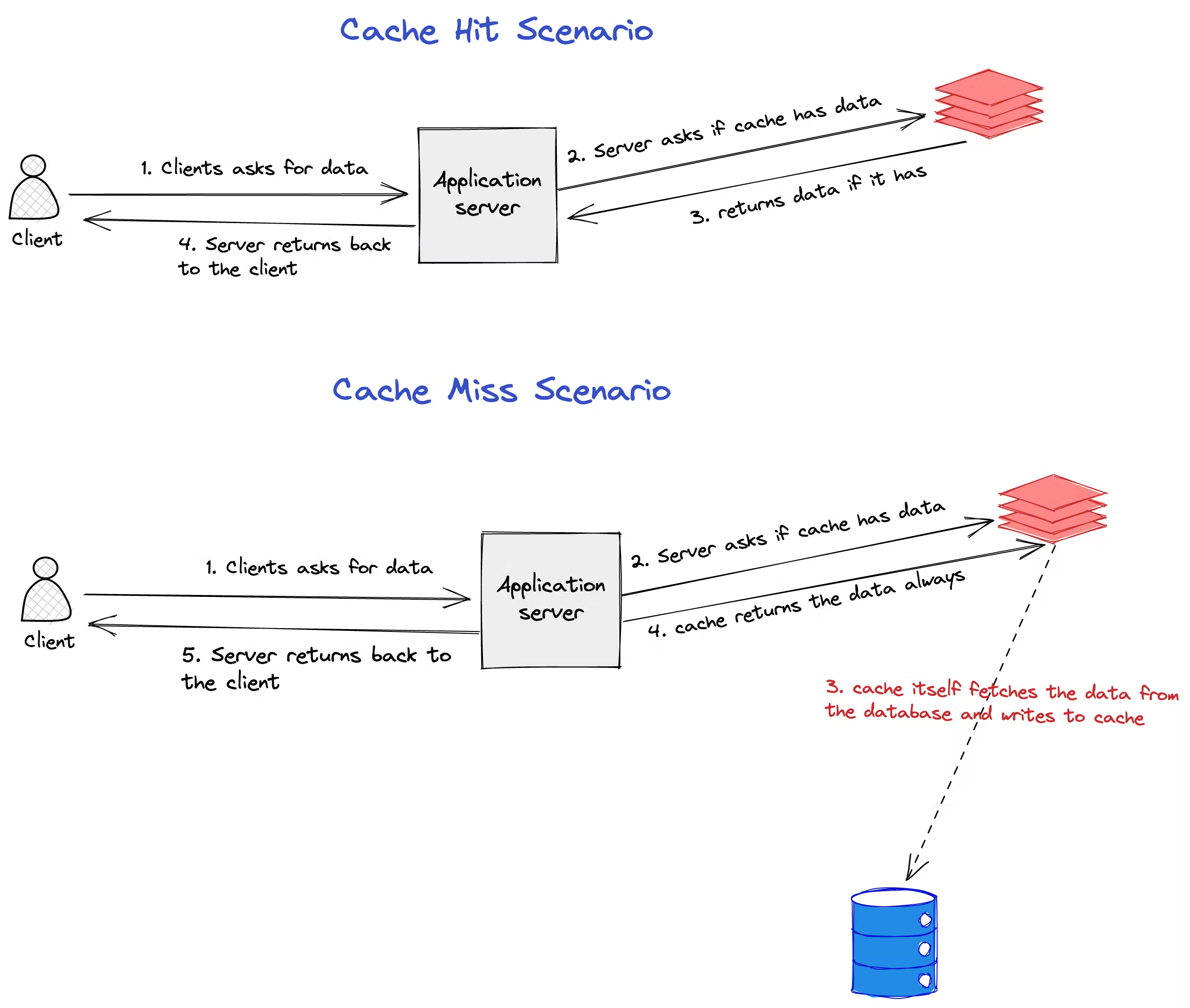

Cache Patterns

Caching is a technique for storing frequently accessed data in ‘near’ storage locations, reducing the time required to fetch it. Whenever an application reads stored information from any kind of data store, it first tries the cache. If the data is found in the cache (a cache hit), it is returned immediately. This not only reduces latency but also improves overall responsiveness.

Caching design patterns outline specific ways to organize and manage the caching process, providing guidelines on when and how to store and retrieve data efficiently. The patterns you choose to implement should be directly related to your caching and application objectives. The first, and perhaps simplest, caching design pattern is local browser caching. Laziness should serve as the foundation of any good caching strategy: the basic idea is to populate the cache only when an object is actually requested by the application.
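A minimal sketch of this lazy approach (often called lazy loading or cache-aside), assuming a plain Python dict as the cache and a hypothetical `slow_db_lookup` function standing in for the data store. The names `make_cache_aside_reader`, `slow_db_lookup`, and `read_user` are illustrative only, not part of any library.

```python
from typing import Any, Callable, Dict, Hashable


def make_cache_aside_reader(load_from_store: Callable[[Hashable], Any]) -> Callable[[Hashable], Any]:
    """Wrap a slow lookup so every read checks an in-process cache first."""
    cache: Dict[Hashable, Any] = {}

    def read(key: Hashable) -> Any:
        if key in cache:              # cache hit: return immediately
            return cache[key]
        value = load_from_store(key)  # cache miss: fetch from the slower store
        cache[key] = value            # populate lazily, only for keys actually requested
        return value

    return read


# Hypothetical stand-in for a database query; records every call it receives.
calls = []


def slow_db_lookup(user_id: int) -> dict:
    calls.append(user_id)
    return {"id": user_id, "name": f"user-{user_id}"}


read_user = make_cache_aside_reader(slow_db_lookup)
read_user(42)      # miss: reaches the database
read_user(42)      # hit: served from the cache
print(len(calls))  # 1
```

Nothing is loaded up front; the cache only ever holds entries the application has asked for.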

Architecture Patterns: Caching (Part 1), Kislay Verma (2022)

Caching design patterns are structured methods that developers use to optimize application performance through caching strategies.

If the data exists in the cache, it is sent directly back to the application; this event is called a cache hit.

Caching works by temporarily copying frequently accessed data to fast storage located close to the application.
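Fast storage close to the application is often just process memory, which is limited, so a cache usually needs a rule for what to drop when it is full. The text does not name one; least-recently-used (LRU) eviction is swapped in here as an assumption, and `LRUCache` is a hypothetical class built on the standard library's `OrderedDict`.

```python
from collections import OrderedDict
from typing import Any, Hashable, Optional


class LRUCache:
    """Bounded in-memory cache that evicts the least recently used entry."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data: "OrderedDict[Hashable, Any]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[Any]:
        # Returns None on a miss (so storing None as a value would be ambiguous).
        if key not in self._data:
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key: Hashable, value: Any) -> None:
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used entry


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")         # "a" becomes most recently used
cache.put("c", 3)      # over capacity: evicts "b"
print(cache.get("b"))  # None
print(cache.get("a"))  # 1
```

Frequently read keys keep getting moved to the "recent" end, so they tend to stay in the fast tier while rarely used ones are evicted.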

Modern Caching 101: What Is In-Memory Cache, When and How to Use It

Understanding the caching design pattern, Hands-On Design Patterns

If done right, caches can reduce response times, decrease load on the database, and save costs.
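The reduced database load can be illustrated with Python's built-in `functools.lru_cache`, used here as a simple in-process cache in front of a hypothetical `query_database` function that counts how often it is called. This is a sketch under those assumptions, not a production caching layer.

```python
from functools import lru_cache

db_queries = 0  # counts trips to the (simulated) database


def query_database(product_id: int) -> str:
    """Hypothetical slow lookup standing in for a real database query."""
    global db_queries
    db_queries += 1
    return f"product-{product_id}"


@lru_cache(maxsize=128)
def get_product(product_id: int) -> str:
    # Only cache misses reach query_database; hits are answered from memory.
    return query_database(product_id)


for pid in [1, 2, 1, 1, 2, 3]:
    get_product(pid)

print(db_queries)                # 3: only the first request per id reaches the database
print(get_product.cache_info())  # CacheInfo(hits=3, misses=3, maxsize=128, currsize=3)
```

Six reads cost three database queries; the other three are cache hits.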

Cache distribution pattern in a three-tier web architecture, Solutions

There are several caching strategies, and choosing the right one depends on your caching and application objectives.

Caching Patterns, Lokesh Sanapalli: A pragmatic software engineering

Let's understand this from another perspective: with lazy loading, the cache is populated only when the application actually requests an object, rather than being filled up front, so it holds only data that has been asked for.

Design Patterns: Cache-Aside Pattern

A private cache is a cache tied to a specific client, typically a browser cache. Since the stored response is not shared with other clients, a private cache can store a personalized response for that user.

The Overall Application Flow Goes Like This:

The application first looks for the requested data in the cache. If it exists, we retrieve the data from the cache and return it. If it does not, we read it from the underlying data store, write it into the cache, and then return it, so the next request for the same data is a cache hit.
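The application flow above can be sketched as a small cache-aside reader. This version also gives each cached entry a time-to-live (TTL) so stale data is eventually re-read; the TTL, the injectable `clock`, and the names `TTLCacheAside` and `load_price` are assumptions for illustration, not something the text prescribes.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCacheAside:
    """Cache-aside reader: check the cache first, fall back to the store on a miss."""

    def __init__(
        self,
        load: Callable[[Hashable], Any],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._load = load        # reads from the underlying data store
        self._ttl = ttl_seconds  # assumed expiry policy, not from the text
        self._clock = clock      # injectable so expiry can be tested
        self._entries: Dict[Hashable, Tuple[Any, float]] = {}  # key -> (value, expires_at)

    def get(self, key: Hashable) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]                            # fresh hit: serve from the cache
        value = self._load(key)                        # miss or expired: read the store
        self._entries[key] = (value, now + self._ttl)  # write back for later requests
        return value


# Usage with a fake clock and a hypothetical price lookup.
fake_now = [0.0]
loads = []


def load_price(sku: str) -> float:
    loads.append(sku)
    return 9.99


prices = TTLCacheAside(load_price, ttl_seconds=60, clock=lambda: fake_now[0])
prices.get("sku-1")  # miss: reads the store
prices.get("sku-1")  # hit within the TTL
fake_now[0] = 61.0
prices.get("sku-1")  # entry expired: reads the store again
print(len(loads))    # 2
```

Injecting the clock keeps the expiry logic deterministic in tests; in real use the default `time.monotonic` is unaffected by wall-clock adjustments.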

The First Caching Choice You Need To Make

The first choice is between private caches and shared caches. A private cache belongs to a single client, while a shared cache sits between clients and the server (for example, a proxy or a CDN) and can store responses that are reused across many users.
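For HTTP responses, this choice is typically signalled with the `Cache-Control` response header: the `private` directive restricts storage to the client's own cache, and `public` explicitly allows shared caches to store the response as well. The helper `cache_control_header` below is a hypothetical sketch, and the `max-age` values are arbitrary examples.

```python
def cache_control_header(personalized: bool, max_age_seconds: int) -> str:
    """Build a Cache-Control value for an HTTP response (hypothetical helper)."""
    if max_age_seconds < 0:
        raise ValueError("max_age_seconds must be non-negative")
    # "private": only the client's own cache (e.g. the browser) may store it.
    # "public": shared caches such as proxies or CDNs may store it too.
    scope = "private" if personalized else "public"
    return f"{scope}, max-age={max_age_seconds}"


print(cache_control_header(personalized=True, max_age_seconds=300))    # private, max-age=300
print(cache_control_header(personalized=False, max_age_seconds=3600))  # public, max-age=3600
```

Marking personalized responses `private` keeps one user's data from being served to another user out of a shared cache.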

Caching data is a useful pattern for any application that needs to serve high traffic and finds itself with latency requirements incompatible with the selected persistence choice.

Databases can be slow (yes, even the NoSQL ones), and as you already know, speed is the name of the game.

Caching too little leads to longer latencies, higher database load, and the need to provision more and more storage.
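To make the cost of an undersized cache concrete, this sketch replays a hypothetical workload that cycles over four hot keys through LRU caches of different sizes (again using `functools.lru_cache` as a stand-in cache) and counts how many requests miss and fall through to the database. The workload and the `database_loads` helper are illustrative assumptions.

```python
from functools import lru_cache
from typing import List


def database_loads(capacity: int, requests: List[int]) -> int:
    """Count requests that miss an LRU cache of the given size and reach the database."""

    @lru_cache(maxsize=capacity)  # a fresh cache for each capacity tested
    def fetch(key: int) -> int:
        return key  # stand-in for a real database read

    for key in requests:
        fetch(key)
    return fetch.cache_info().misses


# Hypothetical workload: 20 requests cycling over 4 hot keys.
workload = [1, 2, 3, 4] * 5

for size in (2, 4):
    print(size, database_loads(size, workload))
# 2 20
# 4 4
```

With room for only two entries, LRU evicts each key just before it is requested again, so every request reaches the database; once the cache fits the working set, only the first request per key does.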